Machine Learning

Co-Pilot: A Machine-Learning-Based Real-Time Traffic Light Alert for Your Car with Raspberry Pi and Coral

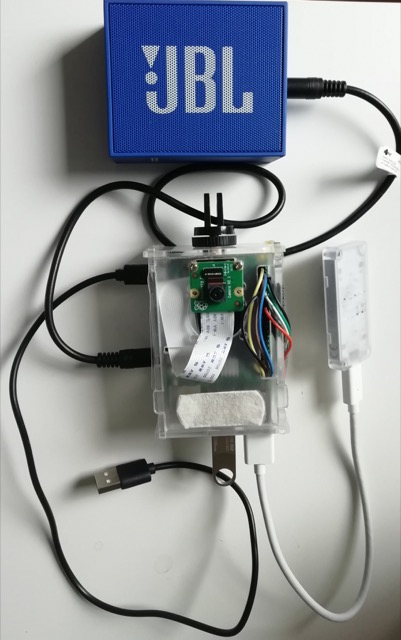

Co-Pilot = Raspberry Pi 3/4 + Raspberry Pi camera + Google Coral TPU = a machine-learning-based real-time traffic light alert and dash cam for your car. Voice alerts are available in English and Chinese (中文).

Co-Pilot can be mounted directly on the windshield with the camera facing forward. It can be powered from any 5V USB port in the car.

It serves not only as a dashcam that records the camera feed, but also alerts the driver in real time about the status of upcoming traffic lights. It acts like a co-pilot that helps an inattentive driver avoid violating traffic rules.

Watch the demo in car

Motivation

Here is my motivation for starting this project: I was once driving, lost in an unfamiliar part of the city, and while I was stressed about finding the right way I accidentally ran a red light. Afterwards I wondered: what if there were a machine that could remind me of a red light, so that I could avoid the mistake?

The system did in fact stop me from running another red light after I deployed it :). In the end, the alert at the red-to-green transition (“Ready Go”) turned out to be more useful than “Attention Red”: it reduces the chance that the driver behind honks at you, which is a nice side effect.

While building Co-Pilot, I had to tackle detection, classification, matching, tracking, threading, real-time constraints, and more, going from problem analysis to finding the right solution.

What Co-Pilot sees

Hardware Setup

- Raspberry Pi 3

- Raspberry Pi Camera v2

- Raspberry Pi 3 case

- Google Coral TPU Accelerator

- 3.5mm audio cable

- JBL GO Speaker

- GoPro mount and 3M tape

- optional: RTC DS3231 (For recording with real timestamp)

- optional: USB pendrive (For bigger storage of recordings)

How it works

Once Co-Pilot is started, the Pi camera continuously records video at 1120x624 and 20 fps. While recording, still images are captured via the video port at 5 Hz. Each image is then resized to 600x300 and used as the input for detecting traffic lights and classifying their states.

Two neural networks are used for this: a detection net that extracts the locations of the traffic lights in the image, and a classification net that classifies their states. For detection I used an off-the-shelf pre-trained SSD model. Since it is already in the Edge TPU tflite format, it runs directly on the Coral accelerator. The input size of the SSD model is only 300x300, so the detection net has to be applied twice to cover the whole 600x300 image. With the help of Coral, the two inferences take ~150 ms to complete. Running the inference purely on the CPU would take 2.3 sec, which is not acceptable for a real-time application.

Given the bounding boxes of the detected traffic lights, the corresponding image regions are resized to 32x16 and then fed into a custom-trained classification net. Its output consists of the probabilities of 11 categories, namely [green, red, yellow, red_yellow, green_left, red_left, green_right, red_right, pedestrian, side, none].
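The two-pass detection can be sketched as follows. This is a minimal illustration and not the project's actual code: it assumes the 600x300 image is split into two side-by-side 300x300 tiles without overlap, and the helper names (`tile_offsets`, `to_full_image`, `merge_detections`) are hypothetical. The SSD inference itself is left out; only the coordinate bookkeeping is shown.

```python
from typing import List, Tuple

# (x_min, y_min, x_max, y_max) in pixels
Box = Tuple[int, int, int, int]


def tile_offsets(image_width: int, tile_width: int) -> List[int]:
    """Left edge of each non-overlapping tile across the image width."""
    return list(range(0, image_width, tile_width))


def to_full_image(box: Box, x_offset: int) -> Box:
    """Shift a box from tile-local coordinates to full-image coordinates."""
    x_min, y_min, x_max, y_max = box
    return (x_min + x_offset, y_min, x_max + x_offset, y_max)


def merge_detections(per_tile: List[List[Box]], offsets: List[int]) -> List[Box]:
    """Combine the per-tile detections into one list in full-image coordinates."""
    merged: List[Box] = []
    for boxes, x_offset in zip(per_tile, offsets):
        merged.extend(to_full_image(box, x_offset) for box in boxes)
    return merged


# Assumed layout: a 600x300 image covered by two 300x300 tiles.
offsets = tile_offsets(600, 300)

# Hypothetical detector output: one box found in each tile.
left_tile_boxes = [(10, 20, 30, 60)]
right_tile_boxes = [(5, 5, 15, 25)]
all_boxes = merge_detections([left_tile_boxes, right_tile_boxes], offsets)
```

A traffic light that straddles the tile border would be cut in two under this assumption; overlapping tiles would be one way to handle that, at the cost of an extra inference.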


The classification net is a lightweight CNN with an architecture similar to LeNet. It also runs on the Coral TPU.

Finally, a tracker built on a Kalman filter and the Hungarian algorithm keeps track of only the traffic light that is relevant for the driver. A pre-recorded voice alert is played according to the state of that traffic light.
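Turning the classifier output into a state could look roughly like this. It is a sketch under assumptions: the label order is taken from the category list above and may differ from the order in models/traffic_light_labels.txt, and both the `decode_state` helper and the `min_confidence` cut-off are hypothetical.

```python
from typing import Sequence, Tuple

# Assumed order; the real order is defined by models/traffic_light_labels.txt.
LABELS = [
    "green", "red", "yellow", "red_yellow",
    "green_left", "red_left", "green_right", "red_right",
    "pedestrian", "side", "none",
]


def decode_state(probabilities: Sequence[float],
                 min_confidence: float = 0.5) -> Tuple[str, float]:
    """Return (label, probability) of the most likely category.

    Falls back to "none" when the best score is below min_confidence
    (a hypothetical threshold, not taken from the project).
    """
    if len(probabilities) != len(LABELS):
        raise ValueError("expected one probability per label")
    best = max(range(len(LABELS)), key=lambda i: probabilities[i])
    score = probabilities[best]
    if score < min_confidence:
        return "none", score
    return LABELS[best], score


# Hypothetical classifier output for one 32x16 crop.
probs = [0.02, 0.90, 0.01, 0.01, 0.01, 0.01, 0.01, 0.01, 0.01, 0.005, 0.005]
label, score = decode_state(probs)
```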
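The matching step of such a tracker can be illustrated as below. This sketch is not the project's implementation: it uses IoU between boxes as the matching score (an assumption), and it replaces the Hungarian algorithm with a brute-force search over all assignments, which gives the same optimal result for the handful of traffic lights in a frame but does not scale. The Kalman prediction step is omitted, and `associate` and `min_iou` are hypothetical names.

```python
from itertools import permutations
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks: List[Box], detections: List[Box],
              min_iou: float = 0.1) -> List[Tuple[int, int]]:
    """Return (track_index, detection_index) pairs with minimal total cost.

    Cost is 1 - IoU. Brute force stands in for the Hungarian algorithm here.
    """
    if not tracks or not detections:
        return []
    # Always enumerate assignments of the smaller set into the larger one.
    swap = len(tracks) > len(detections)
    small, large = (detections, tracks) if swap else (tracks, detections)
    best_cost, best_perm = None, None
    for perm in permutations(range(len(large)), len(small)):
        cost = sum(1.0 - iou(small[i], large[j]) for i, j in enumerate(perm))
        if best_cost is None or cost < best_cost:
            best_cost, best_perm = cost, perm
    pairs = []
    for i, j in enumerate(best_perm):
        t, d = (j, i) if swap else (i, j)
        # Drop pairings that barely overlap; those are treated as unmatched.
        if iou(tracks[t], detections[d]) >= min_iou:
            pairs.append((t, d))
    return sorted(pairs)


# Hypothetical data: two tracked lights, three new detections.
tracks = [(0, 0, 10, 20), (100, 0, 110, 20)]
detections = [(101, 1, 111, 21), (1, 0, 11, 20), (300, 0, 310, 20)]
matches = associate(tracks, detections)
```

Unmatched detections (index 2 in this example) would typically start new tracks, and tracks that stay unmatched for several frames would be dropped.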

Limitations

- Currently works only with vertically placed traffic lights, optimized for Germany.

- Delay of ~0.3 sec for each detection (a Raspberry Pi 4 might perform better; I didn’t have one to test).

- Cannot see traffic lights at long range, because the image is scaled down to save computation.

- Still produces some false positive detections, largely due to inaccurate and unstable bounding boxes from the pre-trained model. (There is a plan to retrain a new model.)

- ~100 ms delay for capturing an RGB image and ~100 ms delay for transferring data to the Coral accelerator. Both problems might be mitigated when deployed on a Pi 4, thanks to its faster processor and USB 3.0, which could enable a higher frame rate.

Deployment Instructions

Dependencies

# on rpi (sudo added because writing to /etc/apt and installing packages require root)

echo "deb https://packages.cloud.google.com/apt coral-edgetpu-stable main" | sudo tee /etc/apt/sources.list.d/coral-edgetpu.list

curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

sudo apt-get update

sudo apt-get install -y libedgetpu1-std

sudo apt-get install -y python3-pycoral

sudo apt-get install -y python3-tflite-runtime

python3 -m pip install -r requirements_pi.txt

sudo apt-get install libsdl2-mixer-2.0-0 libsdl2-2.0-0

Run Co-Pilot

git clone https://github.com/xeonqq/co-pilot.git

cd co-pilot

python3 -m src.main --ssd_model models/ssd_mobilenet_v2_coco_quant_no_nms_edgetpu.tflite --label models/coco_labels.txt --score_threshold 0.3 --traffic_light_classification_model models/traffic_light_edgetpu.tflite --traffic_light_label models/traffic_light_labels.txt --blackbox_path=./

I use supervisor to start Co-Pilot when the Raspberry Pi boots.

Adjust volume

Once you’ve SSH’d into your Pi, type “alsamixer”. This will bring up an interface within the terminal which will allow you to set the volume of the Raspberry Pi. Simply press the up and down arrow keys to either increase or decrease the volume. When you are done, press ESC.

Test

# under repo root folder

python3 -m pytest

# or

python3 -m tests.test_detection

python3 -m tests.test_classification

Reprocess with recorded video (On Host PC)

Build and run docker container

./build.sh

./linux_run.sh

In docker container

cd workspace

python3 -m src.reprocess --ssd_model models/ssd_mobilenet_v2_coco_quant_no_nms_edgetpu.tflite --label models/coco_labels.txt --score_threshold 0.3 --traffic_light_classification_model models/traffic_light_edgetpu.tflite --traffic_light_label models/traffic_light_labels.txt --blackbox_path=./ --video recording_20210417-090028.h264.mp4 --fps 5

Both main and reprocess can be run without the Coral TPU by specifying the --cpu option.
